Apache Avro, created by Doug Cutting, is a data serialization system and file format for Hadoop. Avro integrates easily with dynamic languages, and implementations are available for C, C++, C#, Java, PHP, Python, and Ruby.

Avro file

An Avro file has two parts:

- Data definition (Schema)

- Data

Both the data definition and the data are stored together in one file. An Avro file starts with a header, and the header has a metadata section where the schema is stored. All objects stored in the file must be written according to that schema.

Avro Schema

Avro relies on schemas for reading and writing data. Avro schemas are defined in JSON, which helps with data interoperability.

Schemas are composed of primitive types (null, boolean, int, long, float, double, bytes, and string) and complex types (record, enum, array, map, union, and fixed).

You can write a schema in a separate file with the .avsc extension.

Avro Data

Avro data is serialized and stored in a binary format, which makes for compact and efficient storage. Avro data itself is not tagged with type information, because the schema used to write the data is always available when the data is read. The schema is required to parse the data. This permits each datum to be written with no per-value overhead, making serialization both fast and small.
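To illustrate why untagged data stays compact, here is a minimal Python sketch that writes a record's fields back to back in schema order. It assumes Avro's binary encoding rules (zig-zag variable-length longs, length-prefixed UTF-8 strings). The schema, the sample values, and the helper functions are hypothetical and written only for this illustration; they are not part of any Avro library.

```python
import json

# Hypothetical schema used only for this illustration.
SCHEMA = json.loads("""
{"type": "record", "name": "Person",
 "fields": [{"name": "Id", "type": "long"},
            {"name": "Name", "type": "string"}]}
""")


def encode_long(n: int) -> bytes:
    """Encode a 64-bit signed integer as a zig-zag variable-length value."""
    z = (n << 1) ^ (n >> 63)  # zig-zag: small magnitudes map to small unsigned values
    out = bytearray()
    while z & ~0x7F:
        out.append((z & 0x7F) | 0x80)  # low 7 bits with the continuation bit set
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_string(s: str) -> bytes:
    """Encode a string as its UTF-8 byte length followed by the bytes."""
    raw = s.encode("utf-8")
    return encode_long(len(raw)) + raw


ENCODERS = {"long": encode_long, "string": encode_string}


def encode_record(schema: dict, datum: dict) -> bytes:
    """Concatenate field encodings in schema order; no names or type tags are written."""
    return b"".join(ENCODERS[f["type"]](datum[f["name"]]) for f in schema["fields"])


encoded = encode_record(SCHEMA, {"Id": 1, "Name": "Al"})
print(encoded)  # b'\x02\x04Al'
```

The four output bytes carry only the values. A reader needs the same schema to know that the first byte is a long and the rest is a string.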

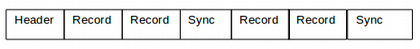

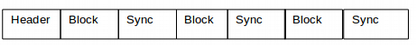

Avro file format

Avro specifies an object container file format. A file has a schema, and all objects stored in the file must be written according to that schema, using binary encoding.

Objects are stored in blocks that may be compressed. Synchronization markers are used between blocks to permit efficient splitting of files for MapReduce processing.

A file consists of:

- A file header, followed by

- one or more file data blocks

The following layout shows the Avro file format.

| Header | Data block | Data block | ... |

An Avro file header consists of:

- Four bytes: ASCII 'O', 'b', 'j', followed by the byte value 1.

- File metadata, including the schema.

- The 16-byte, randomly-generated sync marker for this file.

A file header is thus described by the following schema:

{"type": "record", "name": "org.apache.avro.file.Header",
 "fields" : [
   {"name": "magic", "type": {"type": "fixed", "name": "Magic", "size": 4}},
   {"name": "meta", "type": {"type": "map", "values": "bytes"}},
   {"name": "sync", "type": {"type": "fixed", "name": "Sync", "size": 16}}
 ]
}
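The header layout can be sketched in Python as a round trip: build a header in memory, then parse it back. This is a simplified illustration, not the Avro library's reader. The helper names are hypothetical, and the sketch assumes Avro's binary encoding for longs (zig-zag, variable length) and for maps (blocks of key/value pairs ended by a zero count). The `avro.schema` metadata key is the one the Avro specification uses to hold the schema.

```python
import io
import os

MAGIC = b"Obj\x01"  # ASCII 'O', 'b', 'j', followed by the byte 1


def write_long(n: int) -> bytes:
    """Zig-zag variable-length encoding of a signed 64-bit integer."""
    z = (n << 1) ^ (n >> 63)
    out = bytearray()
    while z & ~0x7F:
        out.append((z & 0x7F) | 0x80)
        z >>= 7
    out.append(z)
    return bytes(out)


def read_long(stream) -> int:
    """Decode one zig-zag variable-length integer from a binary stream."""
    z, shift = 0, 0
    while True:
        b = stream.read(1)[0]
        z |= (b & 0x7F) << shift
        if not b & 0x80:
            break
        shift += 7
    return (z >> 1) ^ -(z & 1)


def write_header(meta: dict, sync: bytes) -> bytes:
    """Magic, then the metadata map (string keys, bytes values), then the sync marker."""
    out = bytearray(MAGIC)
    if meta:
        out += write_long(len(meta))
        for key, value in meta.items():
            k = key.encode("utf-8")
            out += write_long(len(k)) + k + write_long(len(value)) + value
    out += write_long(0)  # a zero count ends the map
    return bytes(out) + sync


def read_header(stream):
    """Return (metadata dict, 16-byte sync marker) or raise if the magic is wrong."""
    if stream.read(4) != MAGIC:
        raise ValueError("not an Avro object container file")
    meta = {}
    while True:
        count = read_long(stream)
        if count == 0:
            break
        if count < 0:  # a negative count is followed by the block size in bytes
            read_long(stream)
            count = -count
        for _ in range(count):
            key = stream.read(read_long(stream)).decode("utf-8")
            meta[key] = stream.read(read_long(stream))
    return meta, stream.read(16)


sync = os.urandom(16)  # randomly generated sync marker
header = write_header({"avro.schema": b'"string"'}, sync)
meta, parsed_sync = read_header(io.BytesIO(header))
print(meta["avro.schema"].decode("utf-8"))
```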

A file data block consists of:

- A long indicating the count of objects in this block.

- A long indicating the size in bytes of the serialized objects in the current block, after any codec is applied.

- The serialized objects. If a codec is specified, this is compressed by that codec.

- The file's 16-byte sync marker.
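The four parts of a data block can be sketched in Python as follows. This is a minimal illustration under stated assumptions: no codec is applied, the objects are passed in already serialized, and the helper names are hypothetical rather than taken from an Avro library.

```python
import io
import os


def write_long(n: int) -> bytes:
    """Zig-zag variable-length encoding of a signed 64-bit integer."""
    z = (n << 1) ^ (n >> 63)
    out = bytearray()
    while z & ~0x7F:
        out.append((z & 0x7F) | 0x80)
        z >>= 7
    out.append(z)
    return bytes(out)


def read_long(stream) -> int:
    """Decode one zig-zag variable-length integer from a binary stream."""
    z, shift = 0, 0
    while True:
        b = stream.read(1)[0]
        z |= (b & 0x7F) << shift
        if not b & 0x80:
            break
        shift += 7
    return (z >> 1) ^ -(z & 1)


def write_block(serialized_objects: list, sync: bytes) -> bytes:
    """Object count, payload size in bytes, payload, then the file's sync marker."""
    payload = b"".join(serialized_objects)
    return (write_long(len(serialized_objects))
            + write_long(len(payload))
            + payload
            + sync)


def read_block(stream, sync: bytes):
    """Return (object count, payload bytes); raise if the sync marker does not match."""
    count = read_long(stream)
    size = read_long(stream)
    payload = stream.read(size)
    if stream.read(16) != sync:
        raise ValueError("sync marker mismatch")
    return count, payload


sync = os.urandom(16)
# Two objects that are already serialized: the longs 1 and 2.
block = write_block([write_long(1), write_long(2)], sync)
count, payload = read_block(io.BytesIO(block), sync)
print(count, payload)  # 2 b'\x02\x04'
```

Because every block ends with the same marker, a reader that starts in the middle of a file can scan forward to the next marker and resume at a block boundary.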

How a schema is defined in Avro

An Avro schema is defined using JSON and is one of the following:

- A JSON string, naming a defined type.

- A JSON object, of the form:

{"type": "typeName" ...attributes...}

where typeName is either a primitive or derived type name, as defined below. Attributes not defined in the Avro specification are permitted as metadata, but must not affect the format of serialized data.

- A JSON array, representing a union of embedded types.
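The three forms listed above can be told apart by the JSON value at the top level. The following Python sketch uses only the standard `json` module; the function name `schema_form` is hypothetical and the check is deliberately shallow (it does not validate the schema).

```python
import json


def schema_form(schema_json: str) -> str:
    """Return which of the three JSON forms a schema document uses."""
    schema = json.loads(schema_json)
    if isinstance(schema, str):
        return "name"    # a JSON string naming a defined type
    if isinstance(schema, dict):
        if "type" not in schema:
            raise ValueError('a schema object needs a "type" attribute')
        return "object"  # a JSON object of the form {"type": "typeName", ...}
    if isinstance(schema, list):
        return "union"   # a JSON array representing a union
    raise ValueError("not a valid schema form")


for text in ['"string"', '{"type": "array", "items": "string"}', '["null", "string"]']:
    print(schema_form(text))
```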

Primitive Types in Avro

The primitive types used in Avro are as follows:

- null: no value

- boolean: a binary value

- int: 32-bit signed integer

- long: 64-bit signed integer

- float: single precision (32-bit) IEEE 754 floating-point number

- double: double precision (64-bit) IEEE 754 floating-point number

- bytes: sequence of 8-bit unsigned bytes

- string: Unicode character sequence

For example, a field of type string is defined as:

{"name": "personName", "type": "string"}
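As a rough guide to what each primitive type accepts, the following Python sketch checks a value against a primitive type name. The function `matches_primitive` is hypothetical. It also simplifies one point: Python has a single float type, so the sketch does not distinguish values that fit in a 32-bit float from those that need a 64-bit double.

```python
INT_MIN, INT_MAX = -2**31, 2**31 - 1    # 32-bit signed range
LONG_MIN, LONG_MAX = -2**63, 2**63 - 1  # 64-bit signed range


def is_integer(value) -> bool:
    """True for Python ints, excluding bool (which is a subclass of int)."""
    return isinstance(value, int) and not isinstance(value, bool)


def matches_primitive(type_name: str, value) -> bool:
    """Return True if value is acceptable for the given Avro primitive type name."""
    if type_name == "null":
        return value is None
    if type_name == "boolean":
        return isinstance(value, bool)
    if type_name == "int":
        return is_integer(value) and INT_MIN <= value <= INT_MAX
    if type_name == "long":
        return is_integer(value) and LONG_MIN <= value <= LONG_MAX
    if type_name in ("float", "double"):
        return isinstance(value, float)  # simplification: precision is not checked
    if type_name == "bytes":
        return isinstance(value, bytes)
    if type_name == "string":
        return isinstance(value, str)
    raise ValueError(f"unknown primitive type: {type_name}")


print(matches_primitive("int", 2**31))   # False: outside the 32-bit range
print(matches_primitive("long", 2**31))  # True
```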

Complex Types in Avro

Avro supports six kinds of complex types: record, enum, array, map, union and fixed.

record- Records are defined using the type name "record" and support the following attributes:

- name- A JSON string providing the name of the record (required).

- doc- A JSON string providing documentation to the user of this schema (optional).

- aliases- A JSON array of strings providing alternate names for this record (optional).

- fields- A JSON array listing fields (required).

Each field in a record is a JSON object with the following attributes:

- name- A JSON string providing the name of the field (required).

- doc- A JSON string describing this field for users (optional).

- type- A JSON object defining a schema, or a JSON string naming a record definition (required).

- default- A default value for this field, used when reading instances that lack this field (optional).

- order- Specifies how this field impacts the sort ordering of this record (optional). Valid values are "ascending" (the default), "descending", or "ignore".

- aliases- A JSON array of strings providing alternate names for this field (optional).

For example, a schema for a Person record with Id, Name and Address fields:

{
  "type": "record",
  "name": "PersonRecord",
  "doc": "Person Record",
  "fields": [
    {"name":"Id", "type":"long"},
    {"name":"Name", "type":"string"},
    {"name":"Address", "type":"string"}
  ]
}
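To show how the default attribute of a field comes into play, here is a small Python sketch that fills in missing fields while reading. It is a simplification of what an Avro reader does, not the library's behaviour in full. The `"default": "unknown"` on Address is an assumption added for this illustration (the schema above has no defaults), and `fill_defaults` and the sample values are hypothetical.

```python
import json

# The Person schema, with a default added to Address for illustration only.
READER_SCHEMA = json.loads("""
{
  "type": "record",
  "name": "PersonRecord",
  "doc": "Person Record",
  "fields": [
    {"name": "Id", "type": "long"},
    {"name": "Name", "type": "string"},
    {"name": "Address", "type": "string", "default": "unknown"}
  ]
}
""")


def fill_defaults(schema: dict, datum: dict) -> dict:
    """Return the datum in schema field order, using defaults for missing fields."""
    result = {}
    for field in schema["fields"]:
        name = field["name"]
        if name in datum:
            result[name] = datum[name]
        elif "default" in field:
            result[name] = field["default"]
        else:
            raise ValueError(f"missing field without a default: {name}")
    return result


person = fill_defaults(READER_SCHEMA, {"Id": 7, "Name": "Asha"})
print(person)  # {'Id': 7, 'Name': 'Asha', 'Address': 'unknown'}
```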

enum- Enums use the type name "enum" and support the following attributes:

- name- A JSON string providing the name of the enum (required).

- namespace- A JSON string that qualifies the name (optional).

- aliases- A JSON array of strings providing alternate names for this enum (optional).

- doc- A JSON string providing documentation to the user of this schema (optional).

- symbols- A JSON array listing symbols as JSON strings (required). All symbols in an enum must be unique; duplicates are prohibited.

For example, four seasons can be defined as:

{"type": "enum",
 "name": "Seasons",
 "symbols": ["WINTER", "SPRING", "SUMMER", "AUTUMN"]
}
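The uniqueness rule for symbols is easy to check in code. The Python sketch below loads the Seasons schema, rejects duplicate symbols, and looks up a symbol's zero-based position, which is the value Avro's binary encoding writes for an enum. The function names are hypothetical.

```python
import json

SEASONS = json.loads("""
{"type": "enum",
 "name": "Seasons",
 "symbols": ["WINTER", "SPRING", "SUMMER", "AUTUMN"]}
""")


def check_symbols(schema: dict) -> None:
    """Raise ValueError if the enum lists the same symbol more than once."""
    symbols = schema["symbols"]
    if len(set(symbols)) != len(symbols):
        raise ValueError("duplicate symbols are prohibited")


def symbol_position(schema: dict, symbol: str) -> int:
    """Return the zero-based position of a symbol in the schema."""
    return schema["symbols"].index(symbol)


check_symbols(SEASONS)
print(symbol_position(SEASONS, "SUMMER"))  # 2
```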

array- Arrays use the type name "array" and support a single attribute:

- items- The schema of the array's items.

For example, an array of strings is declared with:

{"type": "array", "items": "string"}

map- Maps use the type name "map" and support one attribute:

- values- The schema of the map's values.

Map keys are assumed to be strings. For example, a map from string to int is declared with:

{"type": "map", "values": "int"}

union- Unions are represented using JSON arrays. For example, ["null", "string"] declares a schema which may be either a null or a string. Avro data conforming to this union must match one of the schemas listed in the union.
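Matching a value against a union can be sketched as finding the first branch that accepts it. The Python example below handles only a few primitive branch names and is not a full resolver; `CHECKS` and `branch_index` are hypothetical names. The returned zero-based position is what Avro's binary encoding writes before the value itself.

```python
# Type checks for the few primitive branches this sketch supports.
CHECKS = {
    "null": lambda v: v is None,
    "boolean": lambda v: isinstance(v, bool),
    "long": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "string": lambda v: isinstance(v, str),
}


def branch_index(union: list, value) -> int:
    """Return the zero-based position of the first union branch matching value."""
    for index, type_name in enumerate(union):
        if CHECKS[type_name](value):
            return index
    raise ValueError(f"value {value!r} matches no branch of {union}")


OPTIONAL_STRING = ["null", "string"]
print(branch_index(OPTIONAL_STRING, None))    # 0
print(branch_index(OPTIONAL_STRING, "Pune"))  # 1
```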

fixed- Fixed uses the type name "fixed" and supports the following attributes:

- name- A string naming this fixed (required).

- namespace- A string that qualifies the name (optional).

- aliases- A JSON array of strings providing alternate names for this fixed (optional).

- size- An integer specifying the number of bytes per value (required).

For example, a 16-byte quantity may be declared with:

{"type": "fixed", "size": 16, "name": "md5"}
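A value for a fixed type must be exactly as long as the declared size. As a small Python sketch, an MD5 digest (always 16 bytes) fits the md5 schema above; the function `check_fixed` and the sample input are hypothetical.

```python
import hashlib
import json

MD5_SCHEMA = json.loads('{"type": "fixed", "size": 16, "name": "md5"}')


def check_fixed(schema: dict, value: bytes) -> bytes:
    """Return value unchanged if its length equals the declared size, else raise."""
    if len(value) != schema["size"]:
        raise ValueError(f"expected {schema['size']} bytes, got {len(value)}")
    return value


digest = hashlib.md5(b"avro").digest()  # an MD5 digest is 16 bytes long
fixed_value = check_fixed(MD5_SCHEMA, digest)
print(len(fixed_value))  # 16
```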

Data encoding in Avro

Avro specifies two serialization encodings: binary and JSON. Most applications will use the binary encoding, as it is smaller and faster.

Reference:

https://avro.apache.org/docs/1.8.2/index.html

