Apart from text files, the Hadoop framework also supports binary files. One of the binary file formats in Hadoop is the SequenceFile, a flat file consisting of binary key/value pairs.

- Advantages of SequenceFile in Hadoop

- Compression types used in Sequence files

- Hadoop SequenceFile Formats

- Uncompressed SequenceFile Format

- Record-Compressed SequenceFile Format

- Block-Compressed SequenceFile Format

- sync-marker in SequenceFile

- SequenceFile class (Java API) in Hadoop framework

- SequenceFile with MapReduce

Advantages of SequenceFile in Hadoop

- Because a sequence file stores data as serialized key/value pairs, it is well suited to storing images and other binary data. It also works well for complex data stored as (key, value) pairs, with the complex data as the value and an ID as the key.

- Because data is stored in binary form, it is typically more compact than the equivalent text file.

- SequenceFile is the native binary file format supported by Hadoop, so it is used extensively in MapReduce as an input/output format. In fact, within the Hadoop framework, the temporary outputs of maps are stored using SequenceFile.

- Sequence files in Hadoop support compression at both the record and block levels. You can also have an uncompressed sequence file.

- Sequence files also support splitting. Because a sequence file is compressed at the record or block level rather than as a single unit, splitting is supported even when the compression codec itself, such as gzip or Snappy, is not splittable.

- One use of a sequence file is as a container for a large number of small files. HDFS and MapReduce work better with a few large files than with many small files, so wrapping small files in a sequence file helps process them efficiently.

Compression types used in Sequence files

A sequence file in Hadoop offers the following compression options.

- No compression- Uncompressed key/value records.

- Record compressed key/value records- Only the values are compressed when record compression is used.

- Block compressed key/value records- Both keys and values are collected in 'blocks' separately and compressed. The size of the 'block' is configurable through the following property in core-site.xml.

io.seqfile.compress.blocksize- The minimum block size for compression in block-compressed SequenceFiles. Default is 1000000 bytes (1 million bytes).
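To get a feel for why the compression granularity matters, here is a minimal sketch that compares compressing every value on its own with compressing a batch of values together. It uses only `java.util.zip` from the JDK and does not touch Hadoop's codecs or on-disk format; the class name, method names and sample data are made up for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.Deflater;

// Illustration only: mimics the idea of record vs. block compression with
// the JDK's DEFLATE implementation. This is NOT Hadoop's codec API.
class CompressionGranularitySketch {

    // Compress a single byte array and return the compressed bytes.
    static byte[] deflate(byte[] input) {
        Deflater deflater = new Deflater();
        deflater.setInput(input);
        deflater.finish();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buffer = new byte[1024];
        while (!deflater.finished()) {
            int n = deflater.deflate(buffer);
            out.write(buffer, 0, n);
        }
        deflater.end();
        return out.toByteArray();
    }

    // "Record" style: each value is compressed independently.
    static int recordCompressedSize(List<byte[]> values) {
        int total = 0;
        for (byte[] value : values) {
            total += deflate(value).length;
        }
        return total;
    }

    // "Block" style: values are buffered together and compressed once.
    static int blockCompressedSize(List<byte[]> values) {
        ByteArrayOutputStream block = new ByteArrayOutputStream();
        for (byte[] value : values) {
            block.write(value, 0, value.length);
        }
        return deflate(block.toByteArray()).length;
    }

    // Hypothetical sample data: many short, similar values.
    static List<byte[]> sampleValues(int count) {
        List<byte[]> values = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            values.add(("status=OK;host=node" + i).getBytes(StandardCharsets.UTF_8));
        }
        return values;
    }

    public static void main(String[] args) {
        List<byte[]> values = sampleValues(1000);
        System.out.println("record-level bytes: " + recordCompressedSize(values));
        System.out.println("block-level bytes:  " + blockCompressedSize(values));
    }
}
```

With many short, similar values the batch usually compresses much better, because the compressor can exploit repetition across records. The trade-off is that a whole block has to be decompressed to reach a single record.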

Hadoop SequenceFile Formats

There are three different formats for SequenceFiles, depending on the CompressionType specified. The header is the same across all three formats.

SequenceFile Header

version- 3 bytes of magic header SEQ, followed by 1 byte of actual version number (e.g. SEQ4 or SEQ6)

keyClassName- key class

valueClassName- value class

compression- A boolean which specifies if compression is turned on for keys/values in this file.

blockCompression - A boolean which specifies if block-compression is turned on for keys/values in this file.

compression codec- CompressionCodec class which is used for compression of keys and/or values (if compression is enabled).

metadata- SequenceFile.Metadata for this file.

sync- A sync marker to denote end of the header.

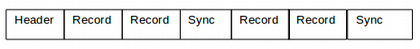
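The version field described above can be checked by hand. The following is a minimal sketch, assuming you already have the first four bytes of a file in memory; the class and method names are hypothetical and this is not how SequenceFile.Reader exposes the header.

```java
// Illustration only: validates the 3-byte "SEQ" magic and returns the
// 1-byte version number that follows it. Names here are hypothetical.
class SequenceFileVersionSketch {

    static int readVersion(byte[] fileStart) {
        if (fileStart.length < 4) {
            throw new IllegalArgumentException("Need at least 4 bytes to read the version field");
        }
        if (fileStart[0] != 'S' || fileStart[1] != 'E' || fileStart[2] != 'Q') {
            throw new IllegalArgumentException("Not a SequenceFile: missing SEQ magic bytes");
        }
        // The fourth byte is the actual version number (for example 4 or 6).
        return fileStart[3];
    }

    public static void main(String[] args) {
        // Made-up bytes standing in for the start of a real file.
        byte[] sample = {'S', 'E', 'Q', 6, 0, 0};
        System.out.println("version = " + readVersion(sample));
    }
}
```

For a real file you would first read its leading bytes, for example with a `java.io.DataInputStream`, and pass them to `readVersion`.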

Uncompressed SequenceFile Format

- Header

- Record

  - Record length

  - Key length

  - Key

  - Value

- A sync-marker every few hundred bytes or so.
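The record layout listed above can be sketched with a plain `ByteBuffer`. This is an illustration under stated assumptions, not Hadoop's writer: it assumes both lengths are 4-byte big-endian integers and that the record length counts the key bytes plus the value bytes, and it uses raw UTF-8 bytes where a real file would hold serialized key and value objects. The class and method names are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Illustration only: lays out one uncompressed record as
// [record length][key length][key bytes][value bytes].
class RecordLayoutSketch {

    static byte[] encode(byte[] key, byte[] value) {
        ByteBuffer buf = ByteBuffer.allocate(4 + 4 + key.length + value.length);
        buf.putInt(key.length + value.length); // record length (assumed: key + value bytes)
        buf.putInt(key.length);                // key length
        buf.put(key);
        buf.put(value);
        return buf.array();
    }

    // Returns a two-element array: {key bytes, value bytes}.
    static byte[][] decode(byte[] record) {
        ByteBuffer buf = ByteBuffer.wrap(record);
        int recordLength = buf.getInt();
        int keyLength = buf.getInt();
        byte[] key = new byte[keyLength];
        buf.get(key);
        // The value length is not stored; it is what remains of the record.
        byte[] value = new byte[recordLength - keyLength];
        buf.get(value);
        return new byte[][] {key, value};
    }

    public static void main(String[] args) {
        byte[] record = encode("id-1".getBytes(StandardCharsets.UTF_8),
                               "hello".getBytes(StandardCharsets.UTF_8));
        byte[][] keyValue = decode(record);
        System.out.println(new String(keyValue[0], StandardCharsets.UTF_8)
                + " -> " + new String(keyValue[1], StandardCharsets.UTF_8));
    }
}
```

Storing the key length separately lets a reader pull out the key without interpreting the value, which is why the value length can be derived rather than stored.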

Record-Compressed SequenceFile Format

- Header

- Record

  - Record length

  - Key length

  - Key

  - Compressed Value

- A sync-marker every few hundred bytes or so.

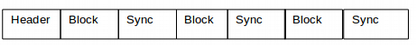

Block-Compressed SequenceFile Format

- Header

- Record Block

  - Uncompressed number of records in the block

  - Compressed key-lengths block-size

  - Compressed key-lengths block

  - Compressed keys block-size

  - Compressed keys block

  - Compressed value-lengths block-size

  - Compressed value-lengths block

  - Compressed values block-size

  - Compressed values block

- A sync-marker every block.

sync-marker in SequenceFile

As the formats above show, a sync-marker is inserted every few hundred bytes when no compression or record compression is used, and after every block when block compression is used. Using these sync-markers, a reader that seeks to an arbitrary position in a sequence file can resynchronize with the next record boundary. This is what allows a large sequence file to be split for parallel processing by MapReduce.
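The resynchronization idea can be sketched as a simple forward scan: start at an arbitrary offset, such as the beginning of a split, and look for the next occurrence of the marker. This is a simplified illustration with a short made-up marker and hypothetical names, not the logic inside SequenceFile.Reader.

```java
// Illustration only: finds the next occurrence of a marker byte sequence
// at or after a given offset, the way a split reader would skip ahead to
// the first record boundary it can trust.
class SyncMarkerScanSketch {

    // Returns the offset where the marker starts, or -1 if none is found.
    static int nextSync(byte[] data, byte[] marker, int from) {
        for (int i = Math.max(from, 0); i + marker.length <= data.length; i++) {
            boolean match = true;
            for (int j = 0; j < marker.length; j++) {
                if (data[i + j] != marker[j]) {
                    match = false;
                    break;
                }
            }
            if (match) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        byte[] marker = {1, 2, 3, 4};   // made-up marker for the demo
        byte[] data = new byte[28];     // zero-filled stand-in for record bytes
        System.arraycopy(marker, 0, data, 10, marker.length);
        System.arraycopy(marker, 0, data, 24, marker.length);

        // A reader dropped at offset 11 skips ahead to the next marker.
        System.out.println("next sync from 11: " + nextSync(data, marker, 11));
    }
}
```

Because every split can find its own starting boundary this way, the splits can be processed independently of one another.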

SequenceFile class (Java API) in Hadoop framework

The main class for working with sequence files in Hadoop is org.apache.hadoop.io.SequenceFile.

SequenceFile provides SequenceFile.Writer, SequenceFile.Reader and SequenceFile.Sorter classes for writing, reading and sorting respectively. There are three SequenceFile Writers based on the SequenceFile.CompressionType used to compress key/value pairs:

- Writer : Uncompressed records.

- RecordCompressWriter : Record-compressed files, only compress values.

- BlockCompressWriter : Block-compressed files, both keys & values are collected in 'blocks' separately and compressed.

Rather than using these Writer classes directly, the recommended way is to use the static createWriter methods provided by SequenceFile to choose the preferred format.

The SequenceFile.Reader acts as the bridge and can read any of the above SequenceFile formats.

Refer to How to Read And Write SequenceFile in Hadoop for example code that reads and writes sequence files using the Java API and MapReduce.

SequenceFile with MapReduce

To use SequenceFile as the input or output format of a MapReduce job, you can use the following classes.

SequenceFileInputFormat- An InputFormat for SequenceFiles. Use it when data from sequence files is the input to a MapReduce job.

SequenceFileAsTextInputFormat- Similar to SequenceFileInputFormat, except that it converts the input keys and values to their String forms by calling the toString() method.

SequenceFileAsBinaryInputFormat- Similar to SequenceFileInputFormat, except that it reads keys and values from SequenceFiles in binary (raw) format.

SequenceFileOutputFormat- An OutputFormat that writes SequenceFiles.

SequenceFileAsBinaryOutputFormat- An OutputFormat that writes keys and values to SequenceFiles in binary (raw) format.

Reference: https://hadoop.apache.org/docs/current/api/org/apache/hadoop/io/SequenceFile.html
