This post gives an overview of HDFS High Availability (HA), why it is required, and how it can be managed.

Problem with single NameNode

To guard against the vulnerability of having a single NameNode in a Hadoop cluster, there are options such as setting up a Secondary NameNode to take over the task of merging the FsImage and EditLog, or using HDFS federation to run separate NameNodes for separate namespaces. Neither option provides a backup NameNode, though, so the NameNode remained a single point of failure (SPOF) in an HDFS cluster. This affected the overall availability of the HDFS cluster in two major ways:

- In the case of an unplanned event such as a machine crash, the cluster would be unavailable until an operator restarted the NameNode.

- Planned maintenance on the NameNode machine, such as a software or hardware upgrade, would result in windows of cluster downtime.

In these cases a new NameNode can start serving requests only after:

- Loading the FsImage into memory and merging all the transactions stored in EditLog.

- Getting enough block reports from DataNodes as per configuration to leave safemode.
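As a rough illustration of the second condition, the check below models leaving safemode as a simple ratio of reported blocks to total blocks. It is a simplified sketch, not the NameNode's actual logic: the function name is hypothetical, and the 0.999 default is an assumption meant to mirror the dfs.namenode.safemode.threshold-pct setting.

```python
def can_leave_safemode(reported_blocks: int, total_blocks: int,
                       threshold_pct: float = 0.999) -> bool:
    """Return True once enough blocks have been reported by DataNodes.

    Simplified model: the real NameNode also considers other settings
    before it leaves safemode.
    """
    if total_blocks == 0:
        return True  # an empty namespace has nothing to wait for
    return reported_blocks / total_blocks >= threshold_pct


# A freshly started NameNode that has heard about 998 of 1000 blocks keeps waiting.
print(can_leave_safemode(998, 1000))
print(can_leave_safemode(1000, 1000))
```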

The Hadoop 2.x release introduced a new feature, HDFS High Availability, that addresses the above problems by providing the option of running two redundant NameNodes in the same cluster in an Active/Passive configuration.

HDFS High Availability architecture

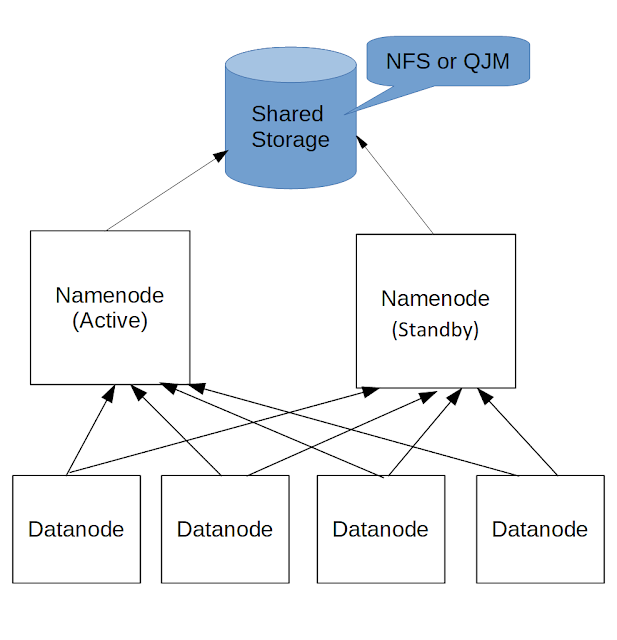

With HDFS high availability two separate machines are configured as NameNodes in a cluster.

Out of these two NameNodes, at any point in time, exactly one of the NameNodes is in an Active state and responsible for all client operations in the cluster.

The other NameNode remains in a Standby state. It has to maintain enough state to provide a fast failover if necessary.

For the Standby NameNode to keep its state synchronized with the Active node, both nodes need access to an external shared entity. There are two options for this shared access:

- Quorum Journal Manager (QJM)

- Shared NFS directory

The general concept is the same in both options: whenever the Active node performs a namespace modification, it also logs a record of the modification to the shared storage. The Standby node reads those edits from the shared storage and applies them to its own namespace.

That way both NameNodes stay synchronized, and the Standby NameNode can be promoted to the Active state in the event of a failover.
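The synchronization described above can be sketched as a small in-memory model. The class and function names are made up for illustration, and the shared storage is just a Python list; real NameNodes persist edits durably and track far more state.

```python
from dataclasses import dataclass, field


@dataclass
class SharedEditLog:
    """Stands in for the shared storage (JournalNodes or an NFS directory)."""
    edits: list = field(default_factory=list)

    def append(self, op: tuple) -> None:
        self.edits.append(op)

    def read_from(self, txid: int) -> list:
        """Return every edit recorded after the first `txid` transactions."""
        return self.edits[txid:]


@dataclass
class NameNode:
    name: str
    namespace: dict = field(default_factory=dict)
    last_applied_txid: int = 0

    def apply(self, op: tuple) -> None:
        kind, path = op
        if kind == "mkdir":
            self.namespace[path] = "dir"
        elif kind == "delete":
            self.namespace.pop(path, None)
        self.last_applied_txid += 1


def active_modify(active: NameNode, log: SharedEditLog, op: tuple) -> None:
    """The Active node records the edit in shared storage and applies it locally."""
    log.append(op)
    active.apply(op)


def standby_catch_up(standby: NameNode, log: SharedEditLog) -> None:
    """The Standby node reads the edits it has not seen yet and replays them."""
    for op in log.read_from(standby.last_applied_txid):
        standby.apply(op)


log = SharedEditLog()
active, standby = NameNode("namenode1"), NameNode("namenode2")
active_modify(active, log, ("mkdir", "/data"))
active_modify(active, log, ("mkdir", "/tmp"))
active_modify(active, log, ("delete", "/tmp"))
standby_catch_up(standby, log)
print(standby.namespace == active.namespace)  # prints True
```

Because the Standby node only replays edits it has not applied yet, calling standby_catch_up repeatedly is harmless, which loosely mirrors a node that keeps watching the shared log.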

Both NameNodes should also know the location of all blocks on the DataNodes. To keep that block mapping information up to date, DataNodes are configured with the location of both NameNodes and send block location information and heartbeats to both.

Shared access with NFS

If you are using an NFS directory for shared access, both NameNodes must have access to that NFS directory.

Any namespace modification performed by the Active node is logged to an edit log file stored in the shared directory. The Standby node constantly watches this directory for edits and, as it sees them, applies them to its own namespace.

Shared access with QJM

In case of QJM both nodes communicate with a group of separate daemons called “JournalNodes” (JNs). When any namespace modification is performed by the Active node, it durably logs a record of the modification to a majority of these JNs.

The Standby node is capable of reading the edits from the JNs, and is constantly watching them for changes to the edit log. As the Standby Node sees the edits, it applies them to its own namespace.

There must be at least 3 JournalNode daemons, since edit log modifications must be written to a majority of JNs. This will allow the system to tolerate the failure of a single machine. You may also run more than 3 JournalNodes, but in order to actually increase the number of failures the system can tolerate, you should run an odd number of JNs, (i.e. 3, 5, 7, etc.). Note that when running with N JournalNodes, the system can tolerate at most (N - 1) / 2 failures and continue to function normally.
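The quorum arithmetic above is easy to check with a few lines of Python. The function names are illustrative only.

```python
def majority(n_journal_nodes: int) -> int:
    """Smallest number of JournalNodes that forms a majority."""
    return n_journal_nodes // 2 + 1


def tolerated_failures(n_journal_nodes: int) -> int:
    """Failures the quorum can absorb: (N - 1) / 2, rounded down."""
    return (n_journal_nodes - 1) // 2


for n in (3, 4, 5, 6, 7):
    print(f"{n} JNs: write quorum {majority(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")
```

Running it shows that 4 JournalNodes tolerate no more failures than 3 (one in both cases), which is why an odd count is recommended.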

Configuration for HA cluster

High availability NameNodes in Hadoop require the following configuration changes in the hdfs-site.xml configuration file.

dfs.nameservices – Choose a logical name for this nameservice, for example “mycluster”

<property>
  <name>dfs.nameservices</name>
  <value>mycluster</value>
</property>

Then you need to list the NameNodes in a property whose name is suffixed with the nameservice ID. For example, if the individual IDs of the NameNodes are namenode1 and namenode2 and the nameservice ID is mycluster:

<property>
  <name>dfs.ha.namenodes.mycluster</name>
  <value>namenode1,namenode2</value>
</property>

You also need to provide the fully-qualified RPC address and the fully-qualified HTTP address for each NameNode to listen on.

<property>
  <name>dfs.namenode.rpc-address.mycluster.namenode1</name>
  <value>machine1.example.com:8020</value>
</property>
<property>
  <name>dfs.namenode.http-address.mycluster.namenode1</name>
  <value>machine1.example.com:50070</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.mycluster.namenode2</name>
  <value>machine2.example.com:8020</value>
</property>
<property>
  <name>dfs.namenode.http-address.mycluster.namenode2</name>
  <value>machine2.example.com:50070</value>
</property>

Next, provide the location of the shared storage.

In the case of QJM with 3 JournalNode machines:

<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>qjournal://node1.example.com:8485;node2.example.com:8485;node3.example.com:8485/mycluster</value>
</property>

In the case of NFS:

<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>file:///mnt/filer1/dfs/ha-name-dir-shared</value>
</property>

dfs.client.failover.proxy.provider.[nameservice ID] - the Java class that HDFS clients use to contact the Active NameNode. The two implementations which currently ship with Hadoop are the ConfiguredFailoverProxyProvider and the RequestHedgingProxyProvider. So use one of these unless you are using a custom proxy provider.

<property>
  <name>dfs.client.failover.proxy.provider.mycluster</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
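The property names in this section follow a pattern built from the nameservice ID and the NameNode IDs. The Python sketch below shows how those names compose for the QJM variant. The helper names ha_properties and to_xml are hypothetical, and the ports are simply the ones used in the examples above. It is an illustration of the naming scheme, not a tool that ships with Hadoop.

```python
import xml.etree.ElementTree as ET


def ha_properties(nameservice: str, namenodes: dict, journal_nodes: list) -> dict:
    """Build the HA-related hdfs-site.xml properties as a name -> value dict.

    `namenodes` maps a NameNode ID to its host name. Ports are the ones used
    in the examples above (8020 RPC, 50070 HTTP, 8485 JournalNode).
    """
    props = {
        "dfs.nameservices": nameservice,
        f"dfs.ha.namenodes.{nameservice}": ",".join(namenodes),
    }
    for nn_id, host in namenodes.items():
        props[f"dfs.namenode.rpc-address.{nameservice}.{nn_id}"] = f"{host}:8020"
        props[f"dfs.namenode.http-address.{nameservice}.{nn_id}"] = f"{host}:50070"
    quorum = ";".join(f"{host}:8485" for host in journal_nodes)
    props["dfs.namenode.shared.edits.dir"] = f"qjournal://{quorum}/{nameservice}"
    props[f"dfs.client.failover.proxy.provider.{nameservice}"] = (
        "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
    )
    return props


def to_xml(props: dict) -> str:
    """Render the properties as a <configuration> document."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    if hasattr(ET, "indent"):  # pretty-printing is available from Python 3.9
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


props = ha_properties(
    "mycluster",
    {"namenode1": "machine1.example.com", "namenode2": "machine2.example.com"},
    ["node1.example.com", "node2.example.com", "node3.example.com"],
)
print(to_xml(props))
```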

Handling NameNode failover in HDFS HA

In case of a failover, the Standby node should be promoted to the Active state, whereas the previously Active NameNode should transition to standby mode.

The failover can be managed manually with the following command, which takes the IDs of the two NameNodes as arguments (here the example IDs namenode1 and namenode2 from the configuration above).

hdfs haadmin -failover namenode1 namenode2

This command initiates a failover from the first NameNode to the second. If the first NameNode is in the Standby state, the command simply transitions the second to the Active state without error. If the first NameNode is in the Active state, an attempt is made to gracefully transition it to the Standby state.

For automatic failover, ZooKeeper can be configured. Automatic failover also uses a ZKFailoverController (ZKFC) process, a ZooKeeper client that monitors and manages the state of the NameNode.

Each of the machines which runs a NameNode also runs a ZKFC. The ZKFC pings its local NameNode on a periodic basis with a health-check command.

If the node has crashed, frozen, or otherwise entered an unhealthy state, the health monitor marks it as unhealthy. In that case the failover mechanism is triggered.
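The health-check loop can be pictured with the toy model below. It is only a sketch of the idea: the HealthMonitor class, its tick method, and the callbacks are hypothetical names, and the real ZKFC coordinates through ZooKeeper rather than a Python list.

```python
from typing import Callable


class HealthMonitor:
    """Toy stand-in for the periodic health check a ZKFC runs on its local NameNode."""

    def __init__(self, health_check: Callable[[], bool],
                 on_unhealthy: Callable[[], None]) -> None:
        self.health_check = health_check
        self.on_unhealthy = on_unhealthy
        self.healthy = True

    def tick(self) -> None:
        """One periodic ping. A failed or raising check marks the node unhealthy."""
        try:
            ok = self.health_check()
        except Exception:
            ok = False  # a crashed or frozen node is treated like a failed check
        if self.healthy and not ok:
            self.healthy = False
            self.on_unhealthy()  # fire once, on the healthy -> unhealthy transition
        elif ok:
            self.healthy = True


# Simulated ping results: two good responses, then the node stops responding.
responses = iter([True, True, False, False])
events = []
monitor = HealthMonitor(lambda: next(responses),
                        lambda: events.append("trigger failover"))
for _ in range(4):
    monitor.tick()
print(events)  # prints ['trigger failover']
```

The callback fires only on the transition from healthy to unhealthy, so a node that stays down does not trigger the failover repeatedly in this model.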

Fencing procedure in HDFS High Availability

In a Hadoop cluster, only one of the NameNodes should be in the active state at any given time for the system to behave correctly. So, in an HDFS High Availability configuration, it is very important to ensure that the NameNode transitioning from active to standby is no longer active.

That is why there is a need to fence the Active NameNode during a failover.

Note that when using the Quorum Journal Manager, only one NameNode is allowed to write to the edit logs in JournalNodes, so there is no potential for corrupting the file system metadata. However, when a failover occurs, it is still possible that the previous Active NameNode could serve read requests to clients.

There are two methods which ship with Hadoop for fencing: shell and sshfence.

sshfence: SSH to the Active NameNode and kill the process. For this fencing option to work, it must be able to SSH to the target node without providing a passphrase.

<property>
  <name>dfs.ha.fencing.methods</name>
  <value>sshfence</value>
</property>
<property>
  <name>dfs.ha.fencing.ssh.private-key-files</name>
  <value>/home/exampleuser/.ssh/id_rsa</value>
</property>

shell: run an arbitrary shell command to fence the Active NameNode. It may be configured like so:

<property>
  <name>dfs.ha.fencing.methods</name>
  <value>shell(/path/to/my/script.sh arg1 arg2 ...)</value>
</property>
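To see where fencing sits in a failover, here is a minimal Python sketch. It assumes that when several fencing methods are configured they are attempted in order until one reports success, and that the standby is promoted only after fencing succeeds. All function names are hypothetical, and the two example methods just return fixed results instead of running SSH or a script.

```python
from typing import Callable, Sequence


def fence(methods: Sequence[Callable[[], bool]]) -> bool:
    """Try each fencing method in order; stop at the first that reports success."""
    for method in methods:
        if method():
            return True
    return False


def failover(fencing_methods: Sequence[Callable[[], bool]]) -> str:
    """Promote the standby only once the old Active node is known to be fenced."""
    if not fence(fencing_methods):
        return "failover aborted: previous Active NameNode could not be fenced"
    return "standby promoted to active"


def sshfence_unreachable() -> bool:
    return False  # stand-in for sshfence when the host cannot be reached over SSH


def shell_script_ok() -> bool:
    return True  # stand-in for a shell fencing script that succeeded


print(failover([sshfence_unreachable, shell_script_ok]))
print(failover([sshfence_unreachable]))
```

In this model a failed sshfence falls through to the shell method, and when every method fails the standby is left alone, so two active NameNodes never coexist.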

That's all for this topic HDFS High Availability. If you have any doubts or suggestions, please drop a comment. Thanks!
